Digital transformation has turned the smartphone into a sophisticated sensor capable of interpreting the physical world. From the retail floor to the domestic environment, image recognition is no longer a "futuristic" add-on, but a baseline expectation.

However, as these technologies scale, a "vision-reality disconnect" often emerges. While features like AR placement can boast 98% scale accuracy, the transition from a "digital twin" to a physical product is fraught with challenges.

A well-implemented image recognition feature can set a product apart in its market. Drawing from our experience and in-depth user review research, we identify the potential bottlenecks in the implementation of particular features, from self-service to product discovery, and describe ways to prevent them.

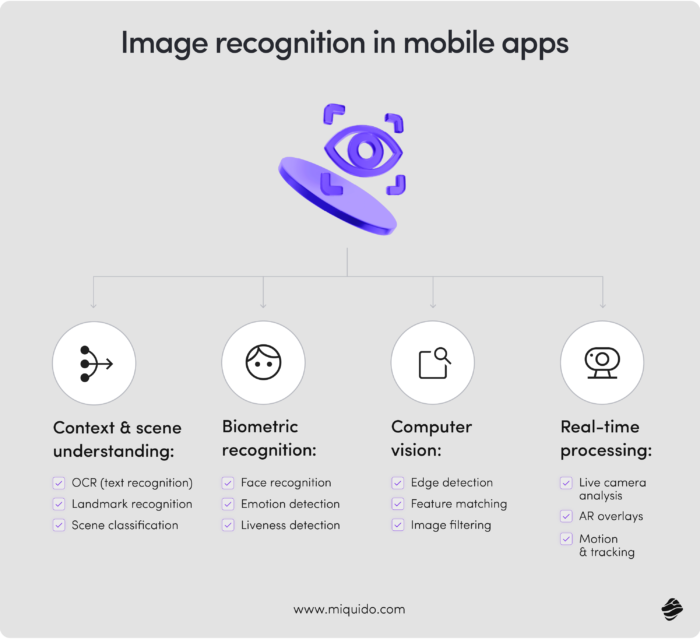

Mobile vision in a nutshell: The foundation of image recognition software

Image recognition is a multi-layered domain. To build a successful app, a partner must understand the specific computational methods and image recognition use cases – from retail visual search to automated medical diagnostics – required for modern deployment:

- Computer vision & edge detection: Simplifying images to extract geometric constructs. Developers often leverage cloud-scale power like Microsoft Azure Computer Vision to handle complex spatial analysis before optimizing for the device.

- Feature matching: Using descriptors like SIFT to find matches in a database. This is critical in image recognition software development, where convolutional neural networks are now the standard for extracting high-level features that traditional algorithms might miss.

- Real-time image processing: Live camera analysis at 60fps. This technology allows apps like Google Lens to translate text or identify products instantly, a feat made possible by optimizing artificial intelligence models to run locally on mobile hardware.

- Context understanding: Utilizing OCR and scene classification. This separates a basic scanner from the best image recognition app, which understands the "vibe" of a scene rather than just raw data.
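To make the first item on this list more tangible, here is a minimal sketch of edge detection using the classic Sobel operator in plain Python. It is an illustration only: production apps would typically rely on an optimized vision library, and the function names `sobel_magnitude` and `edge_mask` as well as the tiny demo image are our own assumptions.

```python
from typing import List

Image = List[List[float]]

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Return the gradient magnitude per pixel; border pixels stay at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_mask(img: Image, threshold: float) -> List[List[bool]]:
    """Mark pixels whose gradient magnitude reaches the threshold."""
    return [[v >= threshold for v in row] for row in sobel_magnitude(img)]


# Hypothetical 5x6 grayscale image: dark left half, bright right half.
demo = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
mask = edge_mask(demo, threshold=1.0)
```

In the demo, only the two columns on either side of the dark-to-bright boundary are flagged as edges, which is the "geometric construct" later stages can build on.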

Refining the development pipeline

Building these apps to identify objects requires more than just code; it requires a robust training pipeline. Successful artificial intelligence depends heavily on high-quality data annotation, where thousands of images are meticulously labeled to teach the software what it’s looking at.

To streamline this, many developers build their models using PyTorch, as its flexibility allows for faster iteration between training and real-world testing. This ensures that the final product is not only accurate but also adaptable to the ever-changing environments a mobile user might encounter.

Self-service features: Image identification supporting customer autonomy

Self-service functions are becoming increasingly common, especially in Europe, which is following in the footsteps of the USA and steadily expanding the range of their applications.

It's high time – as the Miquido report shows, 60% of users prefer handling simple issues themselves rather than talking to an agent, while 46% feel frustrated when self-service options are missing.

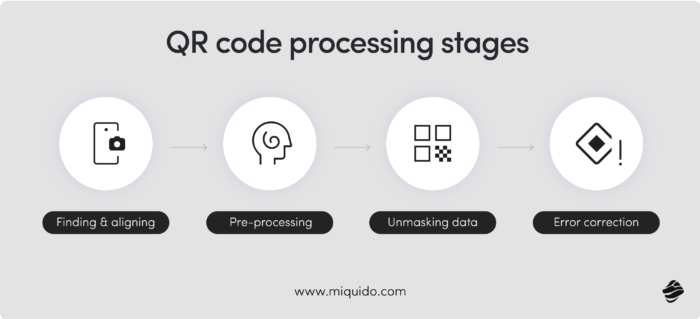

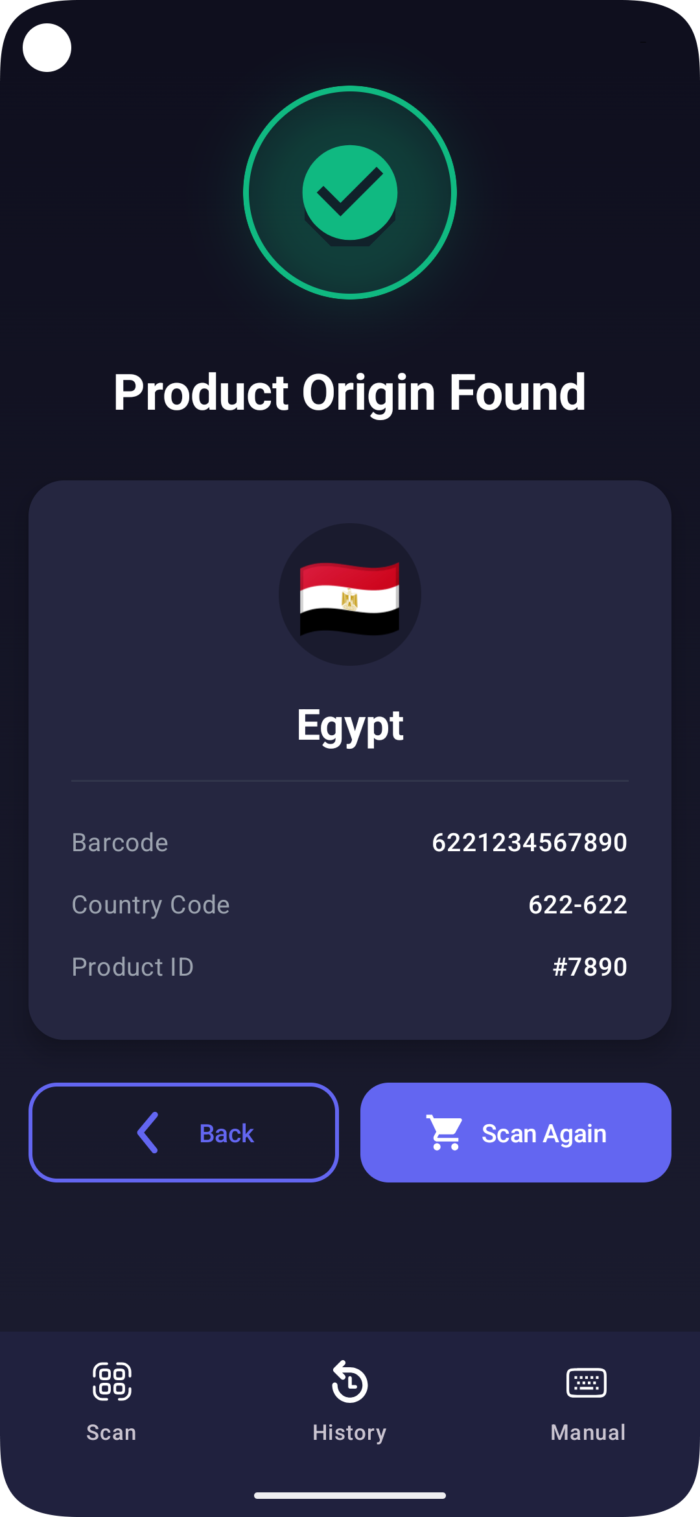

Implementing these functions would not be possible without image recognition in mobile technology. Barcode and QR scanning are the basic building blocks, giving users maximum autonomy. Self-service pickup is also becoming increasingly popular: it cuts costs and lets users avoid queues, which is especially important in places like airports, where passengers want to jump into their rental car as quickly as possible. Here, QR codes and document scanning for data extraction are also key.

This is an opportunity, but also a challenge – image recognition related bottlenecks and socio-technical vulnerabilities can significantly lower the quality of the customer experience, stripping the user of the key benefit: saving time. In such cases, they may feel that the company is saving at their expense, forcing them to do free work. Let's consider how to implement these functions with maximum benefit for both parties.

Self-checkout (Scan & Go) & real-time sync

Self-service shopping is already the norm in many regions on both sides of the Atlantic. The USA and China lead this trend, promoting fully self-service stores, while Europe tends toward hybrid solutions. This has become possible thanks to the presence of self-service checkouts and kiosks, heavily relying on image recognition functions.

However, such solutions have their downsides. First, they do not eliminate queues entirely; second, they can be open to abuse. Take the Target case: at Target kiosks, shoppers have reported an exploit where a previous user initiates a "pay with wallet" session and walks away. The next user then accidentally pays for the previous person's unfinalized items, such as a "Harry Potter LEGO set," when they scan their own barcode.

Real-time mobile solutions could address this problem. Examples are already visible on the European market, especially the self-checkout function in the Empik Premium Pay&GO app or Rossmann GO, which lets you shop independently in drugstores by scanning barcodes with your smartphone. It offers two payment options: directly on the smartphone, or at the checkout by scanning a collective purchase QR code.

The most common challenge on the user side here is not so much image recognition itself, but the interface. For example, users of this type of app report that discounts are not applied in real-time. They must hit "finish to pay" just to see the corrected price, then navigate back to continue scanning.

Systems that synchronize OCR item detection with the pricing engine in milliseconds, allowing users to see coupons apply as they scan, rather than at the end of the transaction, can guarantee a competitive advantage in this regard.
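As a rough illustration of this idea, the sketch below recalculates the discounted total on every scan instead of at checkout. The `Cart` class, the barcodes, the prices, and the coupon table are all hypothetical; a real system would query a pricing engine rather than in-memory dictionaries.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Cart:
    prices_cents: Dict[str, int]   # hypothetical catalogue: barcode -> price
    percent_off: Dict[str, int]    # hypothetical coupons: barcode -> % discount
    quantities: Dict[str, int] = field(default_factory=dict)

    def scan(self, barcode: str) -> int:
        """Register one scanned item and return the updated, discounted total."""
        if barcode not in self.prices_cents:
            raise KeyError(f"unknown barcode: {barcode}")
        self.quantities[barcode] = self.quantities.get(barcode, 0) + 1
        return self.total_cents()

    def total_cents(self) -> int:
        """Apply coupons per item so the user sees the real price while scanning."""
        total = 0
        for barcode, qty in self.quantities.items():
            price = self.prices_cents[barcode]
            discount = price * self.percent_off.get(barcode, 0) // 100
            total += (price - discount) * qty
        return total


cart = Cart(
    prices_cents={"590001": 1000, "590002": 450},
    percent_off={"590001": 20},
)
after_first = cart.scan("590001")    # coupon applied immediately
after_second = cart.scan("590002")   # no coupon for this item
```

Working in integer cents avoids floating-point rounding surprises on the displayed total, which matters when the user is comparing it against shelf prices.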

To ensure the scan-and-go function is also secure for the company, it is important to integrate it with automatic product detection systems. Amazon uses its "Just Walk Out" technology, which employs sophisticated overhead computer vision and weight sensors on shelves to detect exactly when an item is taken or returned, as documented in their technical overviews of Amazon Go stores.

No-contact payments with loyalty program integration

Collecting points during physical shopping usually requires a card or app to be scanned by a cashier or an automated kiosk. In the case of gas stations, however, this can be problematic. We all know the problem: a queue to pay, a traffic jam at the station, and an unnecessary wait for a refueling spot to open up.

Circle K solves this problem by combining image recognition and Bluetooth technologies. The use of Bluetooth beacons enables automatic customer recognition and contactless service delivery. Before the beacon is installed, the subscriber has to scan a QR code, likely opening the vehicle window to capture the image. If the customer does not want to scan the QR code, they are asked to enter the number provided on the terminal.

The primary challenge involves environmental interference, such as glare on the screen or poor signal in remote areas. This is solved by using hybrid authentication, allowing the system to fall back on manual input or license plate recognition via external station cameras if the initial scan fails.
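A fallback chain like this can be sketched in a few lines. The example below is a simplified assumption of how such a flow might be wired: the three authenticator functions are stubs standing in for a QR scan, a station-camera plate reader, and manual code entry, and none of them reflects Circle K's actual implementation.

```python
from typing import Callable, List, Optional, Tuple

# Each authenticator returns a customer ID, or None when nobody was identified.
Authenticator = Callable[[], Optional[str]]


def identify_customer(methods: List[Tuple[str, Authenticator]]) -> Tuple[str, str]:
    """Try each method in order and return (method name, customer ID)."""
    failures = []
    for name, attempt in methods:
        try:
            customer_id = attempt()
        except Exception as exc:  # e.g. camera error caused by glare
            failures.append(f"{name}: {exc}")
            continue
        if customer_id:
            return name, customer_id
        failures.append(f"{name}: no match")
    raise LookupError("; ".join(failures))


# Hypothetical stubs simulating a failed scan and a successful manual entry.
def scan_qr() -> Optional[str]:
    raise RuntimeError("glare on screen")


def read_plate() -> Optional[str]:
    return None  # plate not readable


def manual_code() -> Optional[str]:
    return "CUST-1042"


method, customer = identify_customer(
    [("qr", scan_qr), ("plate", read_plate), ("manual", manual_code)]
)
```

Collecting the failure reasons along the way makes it easier to see later which method breaks most often at a given station.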

Automatic evaluation of returns (The "damage" AI)

The returns process in online shopping is inherently problematic. Retailers like Amazon are exploring "photo-first" returns to solve reverse logistics.

These models use anomaly detection to look for micro-stains or scuffs. However, 2D photos lack depth and structural awareness. One remedy is guided photo capture with AR overlays (arrows and outlines) that prompt users to capture specific angles, so that "hidden damage" (like a broken axle in a vehicle) isn't missed.
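The logic behind guided capture can be as simple as a checklist that the AR overlay works through. The sketch below assumes a fixed, hypothetical set of required angles; a real app would define these per product category and tie each prompt to an on-screen outline.

```python
from typing import Iterable, List

# Hypothetical list of angles a return claim must document.
REQUIRED_ANGLES = ("front", "back", "left", "right", "top", "underside")


def missing_angles(captured: Iterable[str]) -> List[str]:
    """Return the required angles that have not been photographed yet."""
    seen = set(captured)
    return [angle for angle in REQUIRED_ANGLES if angle not in seen]


def next_prompt(captured: Iterable[str]) -> str:
    """Text the overlay could show to steer the user to the next shot."""
    missing = missing_angles(captured)
    if not missing:
        return "All angles captured. You can submit the return."
    return f"Please photograph the {missing[0]} of the item."


prompt = next_prompt(["front", "top"])
```

Submission stays blocked until `missing_angles` returns an empty list, which is what prevents a claim from being filed with the damaged side left out.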

How to stand out? Most current return systems only allow single photo uploads, slowing down documentation. The market needs "automated triage batching" – features that use video-to-3D reconstruction to let a user simply "walk around" the item, creating a digital twin of the damage for instant, indisputable claim processing.

However, there is another side to the coin. With access to GenAI, users can falsify product problems and thus extort refunds. Such cases are unfortunately happening more and more often. Therefore, detection algorithms will have to evolve, or generated images will need to be digitally stamped so that such falsification can be detected. Currently, solutions like C2PA (Coalition for Content Provenance and Authenticity) and digital watermarking are emerging to track the "provenance" of an image, though they are not yet universal in retail apps.

Product discovery features: trying on and fitting in with mobile image recognition app

Without image recognition, we would also lack other important functions that have completely changed the way we shop. They allow us to avoid unsuccessful purchases, which is a benefit on many fronts – it limits returns and complaints, and therefore unnecessary waste, costs, and carbon footprint.

However, although technology is progressing, many companies have tested such functions only to withdraw them shortly after. Revolutionary in intent, they sometimes turn out to be a flop because they work too slowly or do not guarantee a sufficiently realistic preview. A smartphone lets users enjoy these functions, but its hardware capabilities can also limit the experience. Most of the outcome, however, is in the hands of your developers and designers.

Let's look at what users of individual image-recognition-powered product discovery functions most often have problems with and how they can be remedied.

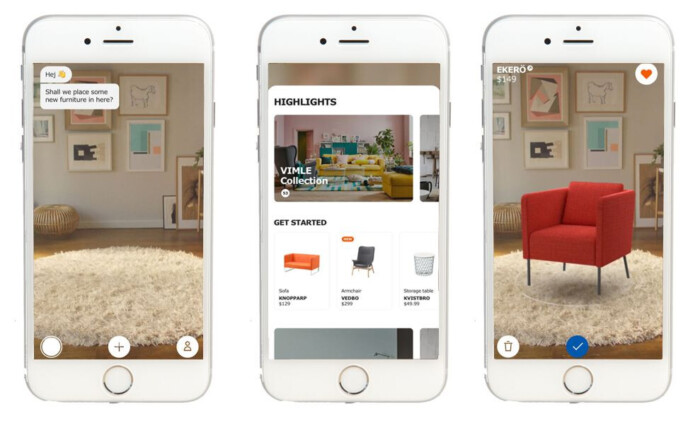

AR product placement in image recognition applications

Anyone who curses the labyrinth of IKEA corridors surely breathed a sigh of relief when it turned out that they could now "try on" furniture without leaving home. Wayfair introduced a similar improvement with Decorify. Just open the app, authorize the smartphone camera, and a virtual piece of furniture in realistic dimensions will fit into the processed image in real-time.

Brilliant in its simplicity? Yes and no. To ensure such a solution does not mislead customers, many aspects must be taken care of at the design stage. First: dimensions. This is the most critical issue, because it may turn out that the furniture simply does not fit in the dedicated space and the customer will be forced to return it.

Technically, the 3D model is scaled using the smartphone's ToF (Time-of-Flight) sensor or ARCore/ARKit scaling, which calculates the distance between the lens and the floor. To guarantee maximum accuracy, the app should require the user to calibrate the space by scanning a known object (like an A4 piece of paper) or by performing a multi-angle scan of the floor.
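The arithmetic behind reference-object calibration is straightforward. The sketch below is a simplified illustration that assumes the A4 sheet and the measured gap lie on the same plane, roughly facing the camera; ARCore and ARKit handle perspective and depth for you. The function names and the 20 mm clearance are our own assumptions.

```python
A4_LONG_EDGE_MM = 297.0  # ISO 216 long edge of an A4 sheet


def mm_per_pixel(a4_long_edge_px: float) -> float:
    """Derive the image scale from how many pixels the A4 long edge spans."""
    if a4_long_edge_px <= 0:
        raise ValueError("the reference object must be visible in the frame")
    return A4_LONG_EDGE_MM / a4_long_edge_px


def fits(gap_px: float, item_width_mm: float, scale: float,
         clearance_mm: float = 20.0) -> bool:
    """Check whether an item, plus a safety clearance, fits the measured gap."""
    gap_mm = gap_px * scale
    return gap_mm >= item_width_mm + clearance_mm


scale = mm_per_pixel(148.5)                                   # mm per pixel
sofa_fits = fits(gap_px=1000, item_width_mm=1800, scale=scale)
wardrobe_fits = fits(gap_px=1000, item_width_mm=1990, scale=scale)
```

Adding a clearance margin is a deliberate design choice: it is better to warn a customer about a tight fit than to let a measurement error of a few millimeters turn into a return.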

Another important issue: lighting. The app must take into account the room conditions captured by the camera, exposure, light tone, etc., matching the color of the inserted furniture to the surroundings so as not to misrepresent its appearance. This is achieved through environmental HDR probes that capture light data from the camera feed and apply it as a shader to the 3D object.

These small details can decide user satisfaction. It is also crucial to ensure intuitive user experience design and proper onboarding. For example, users of such apps often experience confusion during the room-mapping phase. If a room is too dark or the floor too reflective, SLAM (Simultaneous Localization and Mapping) algorithms fail to anchor objects. This can easily be prevented – simple instructions at the beginning are enough to avoid frustration during use.

Another problem that appears in user reviews is the inventory silo. A common user frustration is using augmented reality to confirm a fit, only to find the item is out of stock or cancelled after purchase. In an ideal scenario, the app allows for trying on furniture, but with an appropriate annotation or marking for an unavailable article, so as not to frustrate the user who wants to make a purchase. The app layer responsible for the AR experience should be connected to the inventory in real-time, just like the online shop.

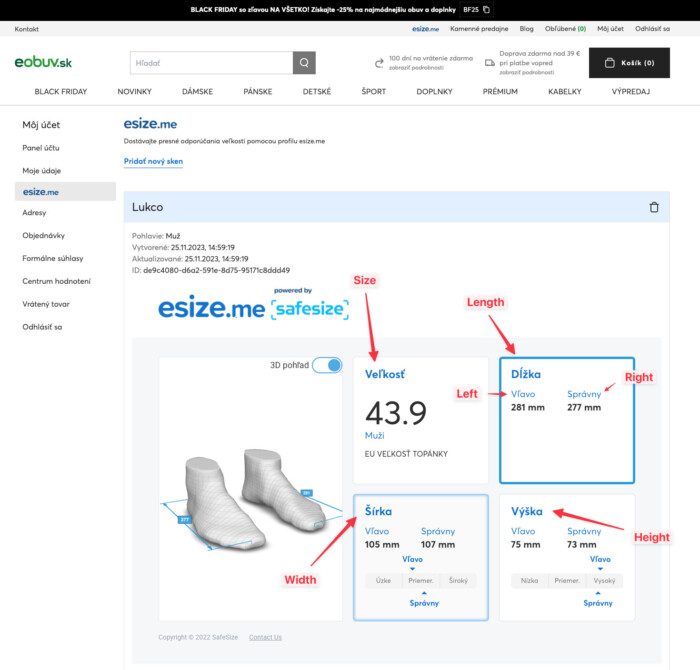

Body scanning

Another aspect with huge potential is body scanning, whether for fitting purposes or for planning nutrition and exercise. In-depth image analysis allows for estimating unique body build features, fat content, and body mass, and proposing adequate solutions based on that.

For example: the biggest challenge in footwear is that "Size 40" is not a universal constant. Systems like esize.me capture foot length and width with 3D precision, even accounting for natural growth cycles in children. However, so far these scans can only be performed in physical stores, while mobile accessibility could be a significant sales driver.

Challenges? When using the body scanning features, users report that measurements can be "way off" compared to tape measures, often overestimating waist size by 5 inches due to shadows or improper camera angles. Edge AI quality gates could solve this issue.

Most apps let users take a "bad" photo and then give a "bad" result. A market-leading solution would use real-time edge processing to block the scan from starting until lighting, posture, and clothing contrast meet defined quality thresholds, drastically reducing the 10% fat-percentage variance often seen in current apps.
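A quality gate of this kind boils down to a handful of checks run on every preview frame. The sketch below is a minimal illustration; the `FrameStats` fields and every threshold are assumptions that would need tuning against real devices and users.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameStats:
    mean_brightness: float     # 0 (black) to 255 (white)
    clothing_contrast: float   # 0 to 1, clothing versus background
    tilt_degrees: float        # phone tilt away from vertical


# Hypothetical thresholds, to be calibrated in testing.
MIN_BRIGHTNESS = 80.0
MAX_BRIGHTNESS = 200.0
MIN_CONTRAST = 0.3
MAX_TILT_DEGREES = 5.0


def gate_failures(stats: FrameStats) -> List[str]:
    """List the reasons a frame is not good enough to start a scan."""
    problems = []
    if stats.mean_brightness < MIN_BRIGHTNESS:
        problems.append("too dark")
    elif stats.mean_brightness > MAX_BRIGHTNESS:
        problems.append("too bright")
    if stats.clothing_contrast < MIN_CONTRAST:
        problems.append("clothing blends into the background")
    if abs(stats.tilt_degrees) > MAX_TILT_DEGREES:
        problems.append("hold the phone upright")
    return problems


def may_start_scan(stats: FrameStats) -> bool:
    return not gate_failures(stats)


dim_room = FrameStats(mean_brightness=40.0, clothing_contrast=0.5, tilt_degrees=12.0)
good_frame = FrameStats(mean_brightness=130.0, clothing_contrast=0.6, tilt_degrees=1.5)
```

Returning the reasons rather than a bare yes or no lets the interface tell the user exactly what to fix before the scan begins.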

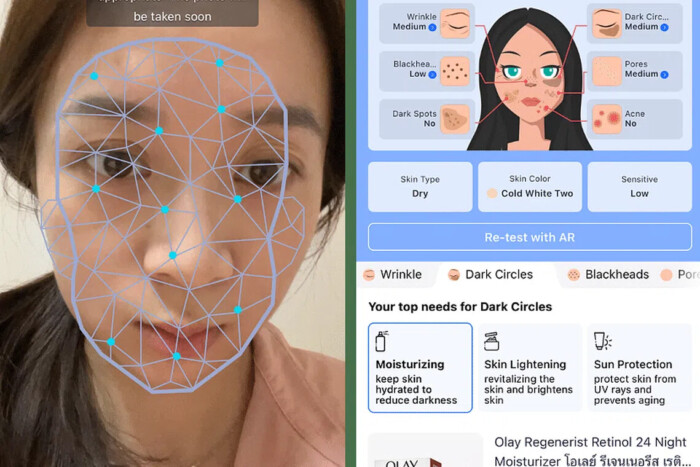

The "skin health" diagnostic

A skin scan is an increasingly popular function used by cosmetic companies as a value-added service and the first stage of the sales funnel. Recommended products can be woven into the results, but the user also gets a solid dose of knowledge, which can build a long-term positive relationship.

While this is a function with great potential, it is also a great challenge. This is perhaps the most personal application of image recognition, analyzing 150+ biomarkers including texture, moisture, and wrinkle depth.

An interesting example is the algorithm falling into the trap of optical illusion. Users report significant frustration with "shade matching." A common complaint involves the camera focusing on a user's temple – often the darkest, most "sun-kissed" part of the body – and then recommending foundation that is too dark for the rest of the face.

Algorithms are not infallible, especially when it comes to skin. Variations in skin tone and facial appearance (e.g., freckles, redness, or sunspots) can throw off detection thresholds. Lighting and shadows are often misinterpreted by the neural network as hyperpigmentation or acne. To solve this, developers can implement multi-point sampling that averages tones across the forehead, cheeks, and chin while using color-calibration cards to normalize for lighting conditions.
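Here is a minimal sketch of that approach. It assumes the calibration card has a patch that should read as pure white, uses a simple per-channel gain to correct the lighting, and averages three hypothetical sample regions. Real color calibration is considerably more involved, so treat the names and numbers as illustrative only.

```python
from typing import Dict, Tuple

RGB = Tuple[float, float, float]


def white_balance_gains(card_white_measured: RGB,
                        reference: RGB = (255.0, 255.0, 255.0)) -> RGB:
    """Per-channel gains that map the card's white patch back to the reference."""
    if min(card_white_measured) <= 0:
        raise ValueError("card patch is under-exposed")
    r, g, b = (ref / m for ref, m in zip(reference, card_white_measured))
    return (r, g, b)


def normalized_average_tone(samples: Dict[str, RGB], gains: RGB) -> RGB:
    """Correct each sampled region for lighting, then average across regions."""
    if not samples:
        raise ValueError("at least one sample region is required")
    corrected = [
        tuple(min(255.0, channel * gain) for channel, gain in zip(rgb, gains))
        for rgb in samples.values()
    ]
    n = len(corrected)
    r, g, b = (sum(px[i] for px in corrected) / n for i in range(3))
    return (r, g, b)


# Hypothetical readings taken under warm indoor light.
gains = white_balance_gains((255.0, 204.0, 170.0))
tone = normalized_average_tone(
    {
        "forehead": (200.0, 160.0, 120.0),
        "cheek": (180.0, 140.0, 100.0),
        "chin": (190.0, 150.0, 110.0),
    },
    gains,
)
```

Because the result is an average over several regions, a single unusually dark or light spot, such as the temple in the example above, no longer decides the recommended shade on its own.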

How to stand out if not through absolute accuracy (which is hard to achieve)? A bold solution, still lacking among brands on the market, would be skin chronology tracking. Most apps offer a one-off analysis. Users would appreciate visual history tools that let them compare scans side by side over months to verify whether a treatment is actually reducing redness or hydration loss, backed by 95% test-retest reliability.

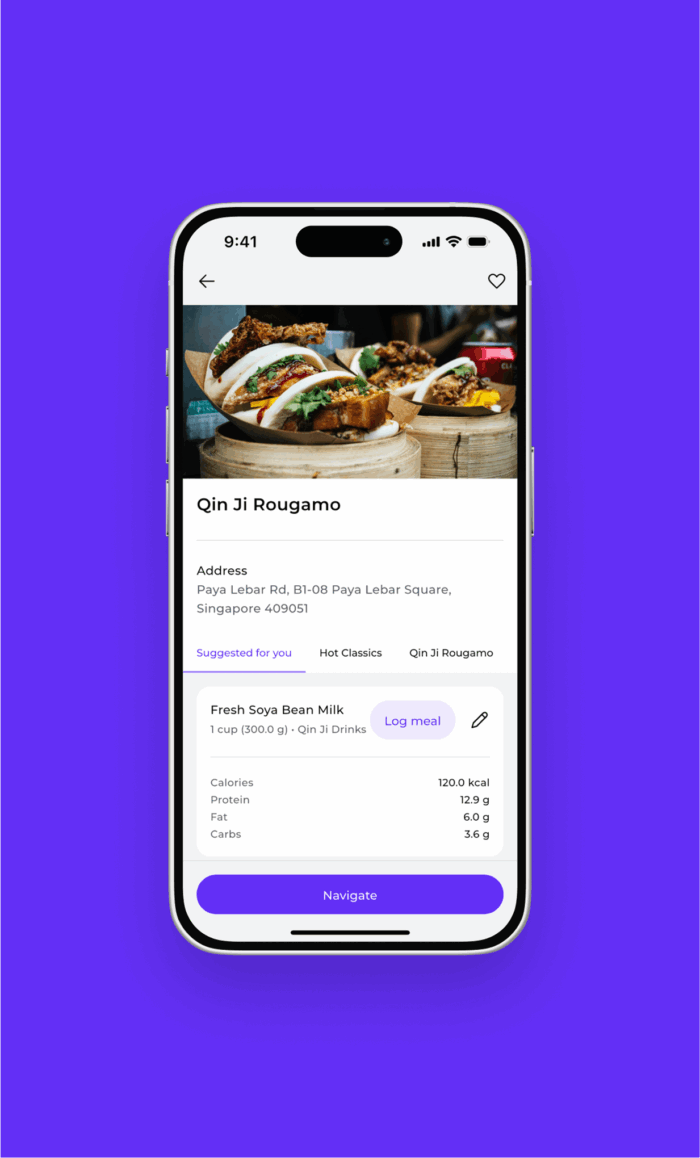

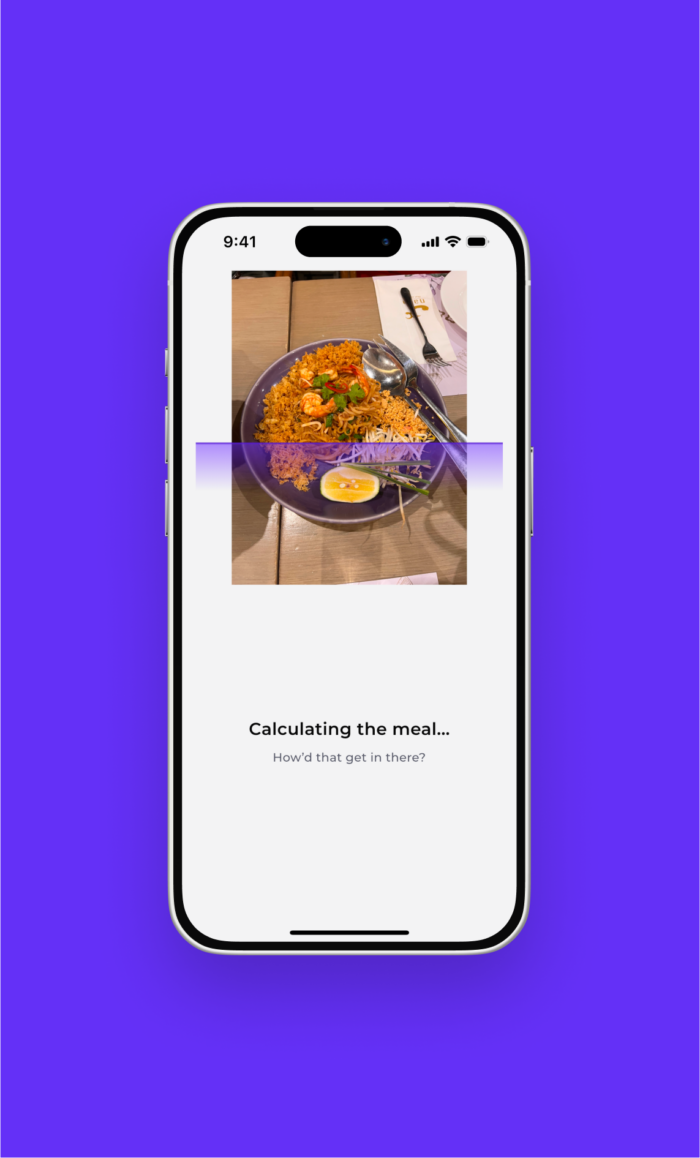

Health & nutrition scanning: AI photo recognition challenges

While the industry promises "instant logging," the reality is often a frustrated user staring at a screen that thinks their artisanal sourdough is a plain slice of white bread. The gap between a pixel and a calorie is a chasm filled with hidden oils, obscured portions, and the "black box" of culinary chemistry.

Most apps fail because they treat food recognition like facial recognition. But while a face stays relatively consistent, a meal is a 3D volume of varying densities. The approach Miquido applied when developing Ventrickle's nutrition app – using lightweight local models backed by broader AI – aims to bridge this gap, but the technical "heavy lifting" happening behind the scenes is immense.

Here is how the machine actually "sees" your dinner, step by step:

- The X-ray vision (segmentation): The AI attempts to digitally "carve" the meal, separating the rice from the gravy and the chicken from the garnish.

- The 3D guess (volumetric estimation): Since a photo is 2D, the AI looks for "anchors" – like the size of your spoon or the rim of the plate – to guess how deep that pile of mashed potatoes really is.

- The density math: The AI applies a "weight" to the volume. It knows that a cup of spinach is mostly air and water, while a cup of peanut sauce is a heavy, calorie-dense fuel.

- The culinary logic: Finally, it queries a source of "general knowledge" (such as a large language model from OpenAI) to infer what it can’t see. If it identifies Nasi Lemak, it knows there is likely coconut milk hidden in the rice and sugar in the sambal.

The biggest challenge to accuracy isn't a blurry photo – it’s transparency. You can photograph two identical-looking plates of stir-fried bok choy. One was steamed with a drop of sesame oil; the other was drenched in lard. To a camera, they look exactly the same. This "invisible fat" is where calorie estimations fall apart. Because AI cannot taste the food or see the prep method, it relies on averages, which can lead to an error margin of 20% to 50%.
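Put together, the steps and the error margin described above come down to simple arithmetic: volume times density times energy per gram, wrapped in an uncertainty range. The sketch below uses made-up volumes, densities, and calorie values purely for illustration; it is not the model used in any particular app.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Component:
    name: str
    volume_ml: float         # from volumetric estimation
    density_g_per_ml: float  # from the density lookup
    kcal_per_g: float        # from the nutrition lookup

    def kcal(self) -> float:
        return self.volume_ml * self.density_g_per_ml * self.kcal_per_g


def estimate_kcal(components: List[Component]) -> float:
    """Sum the estimated calories of all segmented components."""
    return sum(c.kcal() for c in components)


def kcal_range(estimate: float, relative_error: float) -> Tuple[float, float]:
    """Turn a point estimate into a (low, high) range for a given error margin."""
    if not 0 <= relative_error < 1:
        raise ValueError("relative_error must be in [0, 1)")
    return estimate * (1 - relative_error), estimate * (1 + relative_error)


# Hypothetical values for a two-component meal.
meal = [
    Component("rice", volume_ml=200.0, density_g_per_ml=0.8, kcal_per_g=1.3),
    Component("curry", volume_ml=100.0, density_g_per_ml=1.0, kcal_per_g=1.5),
]
total = estimate_kcal(meal)
best_case = kcal_range(total, 0.20)
worst_case = kcal_range(total, 0.50)
```

Showing the user a range instead of a single number is one honest way to communicate the "invisible fat" problem: the same plate could plausibly sit anywhere between the low and high ends.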

The "good vs. bad" binary

Nutrition apps use OCR to assign health scores, but experts warn this can be "arbitrary at best." The app may rate cheese ravioli as "good" while rating string cheese as "poor" based on selective metrics like saturated fat, ignoring the protein and calcium benefits of the cheese.

Where’s the opportunity for you? Instead of "Red Light/Green Light" binary systems, the market is ready for nuanced nutritional coaching. This means providing users with professional dietary context and raw data rather than an arbitrary score, helping to prevent the "fear-mongering" and disordered eating patterns associated with current models.

Image recognition apps that support your growth

You already know the potential challenges, and so do we. We are not afraid to face them! Moreover, we can design image recognition features that make your mobile app stand out in its industry, as we did with Ventrickle. Let's discuss the needs of your users and find ways to tackle them in the most seamless way possible with our mobile app development.
